Theory of attractor neural networks

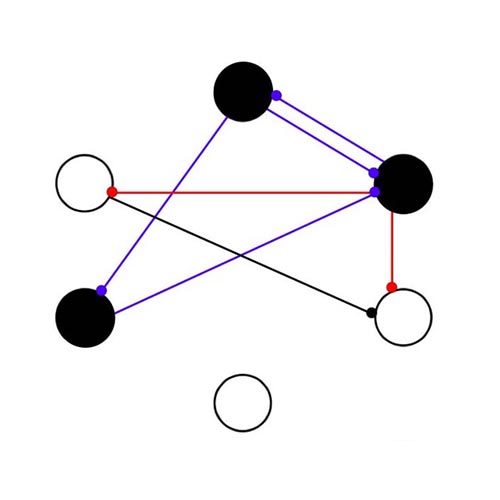

Attractor neural networks are a class of networks with distinctive dynamics: in the space of network states, neural trajectories are drawn towards specific locations, called attractors. Many theoretical tools have been developed over the last 30 years to study their properties, helping to demonstrate their relevance for modeling a variety of cognitive phenomena such as working memory.
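To make the idea of trajectories being drawn towards specific states more concrete, here is a minimal sketch of a Hopfield-type network, one classic example of an attractor network. The model choices are assumptions made for illustration and are not taken from the text: binary ±1 units, Hebbian (outer-product) weights, and asynchronous updates. All function names (`hebbian_weights`, `run_to_attractor`, `energy`, `overlap`) are hypothetical. Starting from a corrupted version of a stored pattern, the state is pulled back towards that pattern, which acts as a fixed-point attractor.

```python
import random

# Illustrative Hopfield-type model (an assumption, not a specific published model):
# +/-1 units, Hebbian weights, asynchronous sign updates.

def hebbian_weights(patterns):
    """Outer-product (Hebbian) weights for +/-1 patterns, with no self-coupling."""
    n = len(patterns[0])
    w = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    w[i][j] += p[i] * p[j] / n
    return w

def energy(w, s):
    """Lyapunov (energy) function; it never increases under asynchronous updates."""
    n = len(s)
    return -0.5 * sum(w[i][j] * s[i] * s[j] for i in range(n) for j in range(n))

def run_to_attractor(w, s, max_sweeps=100, seed=0):
    """Update units one at a time in random order until none changes (a fixed point)."""
    rng = random.Random(seed)
    s = list(s)
    n = len(s)
    for _ in range(max_sweeps):
        changed = False
        order = list(range(n))
        rng.shuffle(order)
        for i in order:
            h = sum(w[i][j] * s[j] for j in range(n))  # local field on unit i
            new = 1 if h >= 0 else -1
            if new != s[i]:
                s[i] = new
                changed = True
        if not changed:
            break
    return s

def overlap(a, b):
    """Normalized similarity between two +/-1 states (1.0 means identical)."""
    return sum(x * y for x, y in zip(a, b)) / len(a)

rng = random.Random(1)
N, P = 100, 3  # 100 units, 3 stored patterns (illustrative sizes)
patterns = [[rng.choice([-1, 1]) for _ in range(N)] for _ in range(P)]
w = hebbian_weights(patterns)

# Start from pattern 0 with 15 randomly chosen units flipped.
cue = list(patterns[0])
for i in rng.sample(range(N), 15):
    cue[i] = -cue[i]

final = run_to_attractor(w, cue)
print("overlap with stored pattern, before:", overlap(cue, patterns[0]))
print("overlap with stored pattern, after: ", overlap(final, patterns[0]))
```

Because the weights are symmetric with zero diagonal, each update can only lower (or keep) the energy, so the dynamics settle into a fixed point. With few stored patterns relative to the number of units, that fixed point is typically the stored pattern itself, which is what "attraction" means in the state space.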

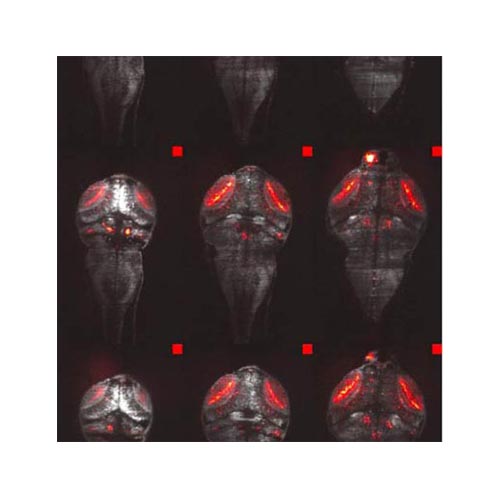

Analysis of biological neural networks

Rapidly developing experimental techniques make it possible to record ever more neurons, at increasing resolution, in behaving animals. This provides a unique opportunity to advance our understanding of brain function, typically by (i) mapping animal behavior onto recorded neural dynamics, and (ii) explaining these dynamics in terms of the anatomy and physiology of the underlying neural substrate.

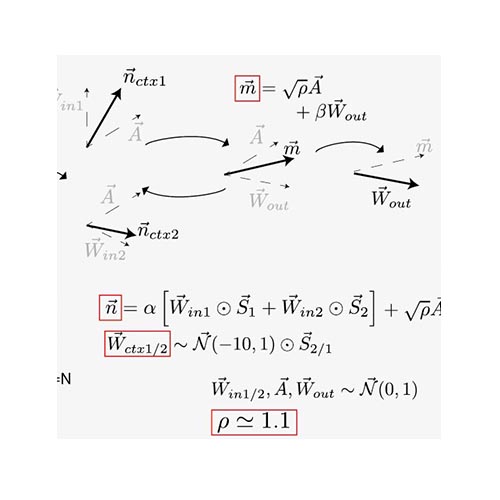

Analysis of artificial neural networks

Recent advances in machine learning have made it possible to train neural networks to perform a wide variety of tasks. These functional networks, which are the outcome of a training algorithm, are often called black boxes, and a major scientific challenge is to develop tools to open them. This is crucial, for instance, in scientific applications of machine learning, where interpreting the behavior of a trained network may be required to gain insight into the underlying mechanisms.